“I’m sorry, Dave – I can’t do that.”

“I’ll be back.”

Those chilling words (uttered, respectively, by the rogue spaceship computer HAL in 2001: A Space Odyssey and by Arnold Schwarzenegger as a relentless cyborg in The Terminator) are emblematic of the way that, for decades, Hollywood has portrayed artificial intelligence (AI) as a malevolent force.

In reality, however, AI is a powerful tool for positive change, helping humans address today’s complex scientific, medical, and social challenges.

“From organic chemistry to astrophysics, our faculty members and students are applying advanced computing and data science tools to gain fresh insights into their fields of study,” says Dean Leonidas Bachas. “The College of Arts & Sciences is on the leading edge of discoveries and advances that will have a profound impact on our world.”

Here are several examples of the ways in which researchers in the College of Arts & Sciences are using these powerful tools.

Decoding Genetic Disorders

When studying rare genetic conditions, researchers run into a data-related roadblock. About 20,000 protein-coding genes and millions of mutations need to be analyzed to find possible correlations and causes – a number much larger than available patient samples, according to Vanessa Aguiar-Pulido, an assistant professor of computer science.

“Machine learning (ML) tools can play an important role in finding the underlying causes of genetic diseases by distinguishing between healthy individuals and those with a specific disease,” says Aguiar-Pulido. She is working with several collaborators to develop an appropriate model for the data sets, as well as ML tools and algorithms to impact the diagnosis and therapeutics of rare and complex genetic disorders.

Aguiar-Pulido teamed with Dr. Stephan Zuchner, professor and chair of the Department of Genetics at the Miller School of Medicine, to study the genetics of hereditary spastic paraplegia, and with researchers at Weill Cornell Medicine to study congenital neural tube defects such as spina bifida. Other study targets include epilepsy and schizophrenia.

“Because we can’t check the mutations on thousands of patients, we need to find creative ways to filter those that may predispose an individual to a disease or affect the severity of symptoms,” Aguiar-Pulido says. “Bringing computer science tools to the biomedical sector can help us better understand these disorders and determine how these findings can be applied to clinical practice.”

Strides in Robotics

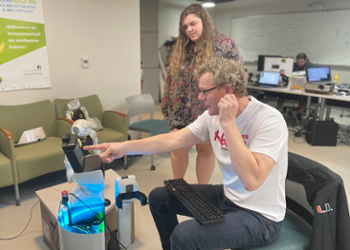

For more than 30 years, Ubbo Visser, an associate professor of computer science and a distinguished faculty member of the Miller School’s Center for Cognitive Science and Aging, has been looking for ways to use robotics and AI to help people in their daily lives.

“There are many ways we can benefit from intelligent machines, such as search and rescue missions into the rubble of buildings or assisting the elderly who live alone,” he says.

Visser is also developing ways for intelligent robots to work together as a team, communicating with each other in real-time dynamic environments. He serves as a trustee of the RoboCup Federation, the official body of the world’s largest AI and robotics community, which runs the RoboCup Soccer Symposium and the RoboCup Soccer WorldCup.

“The University’s RoboCanes team uses cameras and relative positions to determine location and orientation on the field,” he says. “Each robot is autonomous, sharing information so that other robots are able to estimate the position of objects on the field – such as where the ball is or where other robot opponents are located.”
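One common way to combine position reports shared among teammates is to average them, giving more weight to the robots that are more certain of what they see. The short Python sketch below illustrates that general idea only. It is not the RoboCanes implementation, and the `Observation` class, the `fuse_ball_estimates` function, and the variance values are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One robot's report of where it thinks the ball is (hypothetical format)."""
    x: float         # estimated ball x-coordinate on the field, in meters
    y: float         # estimated ball y-coordinate on the field, in meters
    variance: float  # uncertainty of the report; larger means less certain


def fuse_ball_estimates(observations):
    """Combine several reports with an inverse-variance weighted average.

    Returns (x, y, fused_variance). Reports with lower variance count more.
    """
    if not observations:
        raise ValueError("need at least one observation")
    if any(o.variance <= 0 for o in observations):
        raise ValueError("variance must be positive")

    weights = [1.0 / o.variance for o in observations]
    total = sum(weights)
    x = sum(w * o.x for w, o in zip(weights, observations)) / total
    y = sum(w * o.y for w, o in zip(weights, observations)) / total
    return x, y, 1.0 / total


if __name__ == "__main__":
    reports = [
        Observation(x=0.0, y=0.0, variance=1.0),  # robot close to the ball
        Observation(x=4.0, y=4.0, variance=3.0),  # robot far from the ball
    ]
    print(fuse_ball_estimates(reports))
```

In this example the fused estimate lands nearer to the first robot's report, because that robot is assumed to have the clearer view.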

In his laboratory, Visser is training one of the 15 Toyota human support robots in the U.S. to perform certain household tasks.

“Putting seniors into residential facilities creates a huge financial burden on them as well as our health care system,” he says. “If we could deploy service robots in the home, we can help the elderly maintain their independence for as long as possible.”

Along with learning how to manipulate and pick up objects from the floor, a human-support robot should be able to understand simple commands and communicate with humans appropriately, Visser notes.

“We bring psychology, such as building rapport or picking up signs of distress in an individual’s voice, into the picture,” he says. “There have been many advances in natural language programming through deep learning, a technique that teaches computers to do what comes naturally to humans. With AI, we’re hoping to see advances in the next few years.”

Gaining on Biofuel Efficiency

One of the challenges facing the renewable energy sector is the difficulty of extracting impurities from biofuels. Orlando Acevedo, a professor of chemistry, will be using AI tools to search for environmentally friendly cleanup solvents and accelerate the transition from fossil fuels.

“Exploring chemical reactions through simulations now requires costly and time-consuming supercomputers,” says Acevedo. “It can take six months or longer to determine if one chemical reaction is effective.”

With support from a University award and a three-year grant from the National Science Foundation (NSF) for his study “Machine Learning Tools for Biofuel Creation and Purification using Ionic Fluids,” Acevedo will be training a computer on basic chemical reactions and letting the ML algorithm provide examples of the results. “Eventually, these tools could be run on a laptop rather than a supercomputer,” he says.

Along with the potential benefits to the transportation sector, Acevedo believes the project has wider applications. “Machine learning can be applied throughout the field of chemistry,” he says. “We make our tools available to other institutions to support collaborative initiatives.”

Fine-Tuning Health Care

Artificial intelligence can be integrated throughout the health care system, benefiting patients, families, and providers, according to Yelena Yesha, a professor of computer science and the innovation officer and program director of AI and machine learning for the UM Institute of Data Science and Computing, who also serves as the Knight Foundation Chair of Data Science and AI.

“AI and ML technologies can derive new insights from the vast amount of data generated during the delivery of health care every day,” says Yesha.

Examples include earlier disease detection, more accurate diagnosis, and the development of personalized diagnostics and therapeutics. “One of the most significant benefits of AI/ML in software is the ability to learn from real-world experience and improve performance,” she notes.

Yesha says health IT companies and regulators like the U.S. Food and Drug Administration need to accelerate the development and approval of “software as a medical device” for AI-based applications. “There are many opportunities to build and deploy systems to support improvements in patient care,” she says.

To help fast-track the process, she is collaborating with researchers at Rockefeller Neuroscience Institute and West Virginia University in a consortium that brings together multidisciplinary expertise in large radiology systems, medical imaging analytics, clinical data management, real-world evidence collection, and regulatory access to extensive clinical flow and data.

Assessing Medical Risks

“My long-term research goal,” says Liang Liang, an assistant professor of computer science, “is to improve people’s lives.”

Toward that end, he is developing computational methods to improve medical diagnosis and treatment.

For the past few years, Liang has been developing machine learning-based methods for assessing the risk of a deadly thoracic aortic aneurysm (TAA) rupture. Aortic aneurysm disease creates a bulge in the main blood vessel leading out of the heart, and a sudden rupture can lead to immediate death. While surgeons can repair a bulging aorta before it bursts, that decision is currently based on aneurysm size, which has only a weak correlation with the actual risk of rupture.

Liang and his research collaborators at Yale University and Sutra Medical recently received a five-year grant from the National Institutes of Health to apply an ML-based biomechanical analysis approach to identify other risk factors and improve clinical assessments.

For this project, Liang is developing methods to resolve many computational challenges, such as the difficulty of linking patient data with TAA causes. Current biomechanical analysis methods are burdened with a high computation time cost that prevents the use of risk assessment models in a clinical setting.

“With the new risk assessment approach we are developing, we hope to identify high-risk patients who are candidates for aneurysm surgery,” Liang says. “This work has the potential to save millions of lives around the world.”

Measurements To Max Out Returns

Machine learning tools have improved and automated a range of financial practices, according to Bahman Angoshtari, an assistant professor of mathematics.

“Robo-financial advisors are automated portfolio managers that have become widely popular in recent years,” he says. “However, classic portfolio choice models are not suitable for robo-advising because they do not properly capture the interaction between a robo-advisor and its clients.”

Angoshtari is studying a more flexible alternative called forward performance measurement that more closely models client/robo-advisor interaction and would facilitate the use of ML in robo-advising.

“Instead of fixing a model for the whole investment horizon, this approach starts with an initial model for a much shorter horizon and updates the model with each interaction through time, reflecting the changing nature of the market and client expectations.”

Angoshtari is also working on developing a new computational framework that uses artificial neural networks to solve various types of problems in fields such as engineering, biology, management, and finance.

“Currently,” he says, “there is no universal way of solving all these different problems, but deep learning and AI may be able to provide a universal approach.”

Sorting Out Social Media

Stefan Wuchty, an associate professor of computer science, will be using AI and ML tools to detect and analyze harmful content on social media platforms. “AI-based natural language processing tools are our bread-and-butter approaches to find hateful and conspiratorial content, as well as to assess their severity and the language characteristics that make conspiracy theories stick,” he says.

The project, which was launched with a U-LINK grant, recently won a National Science Foundation grant that supports Wuchty and multidisciplinary collaborators including John Funchion, associate professor of English; Joe Uscinski and Casey Klofstad, professors of political science; Michelle Seelig, professor of communication; and Kamal Premaratne and Manohar Murthi, professors of computer engineering.

Wuchty notes that the team also seeks to gain an understanding of the potential unexpected effects of removing conspiracy theories and hateful content from social media platforms. “Our previous research has shown that the removal of content on one platform does not mean that it is really gone, along with its followers,” he says. “Social media platforms play an electronic game of ‘whack-a-mole.’ The same content may show up on a less moderated platform, probably with a vengeance, so our project may offer guidance on content moderation policies for social media platforms.”

Advancing Astrophysics

One of the many mysteries of the universe is the nature of supermassive black holes that shine as active galactic nuclei (AGNs) in regions at the center of galaxies. In certain cases, these astral phenomena can emit tremendous levels of light, from the radio band to X- and gamma rays. The radiation is produced by supermassive black holes attracting surrounding material into themselves. Though the Milky Way does not have an AGN, its center is home to a supermassive black hole. Astronomers have identified, so far, more than 5 million AGNs, and the number continues to climb.

“AGNs are complex objects that emit radiation over the electromagnetic spectrum, so large data sets are necessary for every energy band to characterize these objects,” says Alessandro Peca, a doctoral student in physics.

Under the direction of Nico Cappelluti, assistant professor of physics, doctoral students Peca and Sicong Huang are collaborating with computer scientists Jerry Bonnell and Jack McKeown on a long-term project to study and map all the known AGNs and supermassive black holes in the universe.

With funding from the NASA Chandra X-ray observatory and a pending NSF application, the project applies ML techniques to massive sky observation datasets, which have recently increased from gigabytes to terabytes and petabytes.

“This work is highly relevant to the astronomy community,” says Bonnell. “Many institutions conduct their own observations and publish catalogs with stars, galaxies, active black holes, and other celestial objects. We are building a centralized repository for these data sets. It’s a challenging task to merge them without introducing errors.”

McKeown says ML tools will be vital to identify AGNs in astronomy catalogs and classify them accurately using their spectral energy distribution. “Some types of radiation are highly correlated to certain objects,” he added. “So, once we have some of the spectral data, we can use ML to fill in the blanks and determine the nature of the object.”
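The idea of filling in the blanks can be pictured with a very simple stand-in technique: estimate an object's missing measurements from the catalog entries that most resemble it in the bands that were observed. The Python sketch below uses a basic nearest-neighbor average for this. It is an illustration under stated assumptions, not the UM team's method, and the function name `fill_missing_bands` and the sample flux values are hypothetical.

```python
import math


def fill_missing_bands(target, catalog, k=2):
    """Fill None entries in `target` using the k most similar catalog rows.

    `target` is a list of per-band measurements with None for missing bands.
    `catalog` is a list of complete rows with the same band order.
    Similarity is Euclidean distance over the bands observed in `target`.
    """
    observed = [i for i, value in enumerate(target) if value is not None]
    if not observed:
        raise ValueError("target needs at least one observed band")
    if not catalog:
        raise ValueError("catalog must contain at least one complete row")

    def distance(row):
        return math.sqrt(sum((row[i] - target[i]) ** 2 for i in observed))

    # Keep the k closest rows, then average them band by band where needed.
    neighbors = sorted(catalog, key=distance)[:k]
    return [
        value if value is not None
        else sum(row[i] for row in neighbors) / len(neighbors)
        for i, value in enumerate(target)
    ]


if __name__ == "__main__":
    # Made-up measurements in three bands for three fully observed objects.
    catalog = [
        [1.0, 2.0, 3.0],
        [1.2, 2.2, 3.4],
        [9.0, 9.0, 9.0],
    ]
    partial = [1.1, None, 3.2]  # the middle band was never observed
    print(fill_missing_bands(partial, catalog, k=2))
```

Real catalogs would call for far more careful models, including measurement errors and many more bands, but the sketch shows why correlated bands make such estimates possible.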

Peca noted that the UM team has already tested an application of ML on a catalog with more than 4 million AGNs and, in just five hours, generated results that would have taken several years with traditional analysis methods.

Reflecting on the project, Peca says astrophysicists are expecting a data tsunami in the next five to ten years that will mandate changes to the standard methodologies used in scientific research. “Astrophysics is the keystone to develop and implement new ML big data techniques,” he says. “When these methods will be validated, they can certainly be applied in other scientific disciplines.”