Researchers who set out to help social media platforms and law enforcement dismantle online hate groups have developed a model that shows how tight-knit social clusters form resilient "global hate highways" that bridge online social networks, geographic borders, languages, and ideologies.

In a study published Wednesday in the journal Nature, researchers from the University of Miami and George Washington University found that banning a given hate group from a given social media platform has the unintended consequence of strengthening, rather than weakening, the group because it can quickly self-repair and rewire elsewhere—capturing new recruits like an “all-encompassing flytrap.”

“It is essentially a whack-the-mole game. Once you whack one mole it will show up somewhere else,” said senior author Stefan Wuchty, associate professor in UM’s Department of Computer Science, who helped conceive and supervise the study, which was funded in part by a Phase I grant from the University of Miami Laboratory for Integrative Knowledge, or U-LINK. “It is counter-intuitive, but pushing hate groups from social media platforms actually has the opposite effect. It creates a more concentrated assembly of those groups on a different platform.”

As a supplement to their study, which comes amid a spate of hate-driven violent attacks against gays in Orlando, Jews in Pittsburgh, Muslims in New Zealand, and Hispanics in El Paso, to name a few, the researchers recommended a number of strategies that social media platforms could pursue to thwart online hate groups. Chief among them was working in tandem to coordinate, adopt, and enforce uniform anti-hate policies that would act as roadblocks across the global highways of hate.

“Social media platforms work for themselves, and have their own policies. They don’t reach out to each other to connect their policies, so by each going it alone they open the door for hate groups to move elsewhere, and grow,” Wuchty said.

In their study the researchers acknowledged that social media companies, which are expected to aggressively eliminate hate content from their platforms while balancing the right of free speech, have an immense challenge. After all, every single day 3-plus billion worldwide internet users create approximately 2.5 quintillion bytes of new data, uploading 4 million hours of YouTube content, 67.3 million new Instagram posts, and 4.3 billion new Facebook messages.

“So there is a big market out there to feed your hate, if you are susceptible to it,” Wuchty said. “There are many groups online that cater to your resentments and weaknesses. It is like an all-encompassing flytrap that can quickly capture new recruits who don’t yet have a clear focus for their hate.”

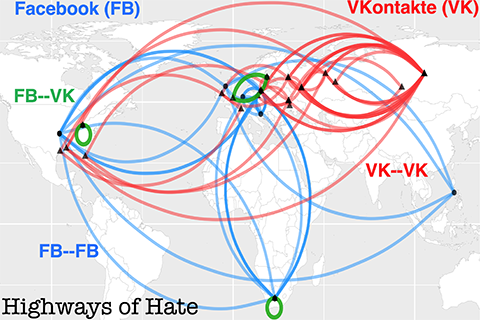

To understand how hate evolves and thrives online, the team began by mapping how clusters interconnect to spread their narratives and attract new recruits. Focusing on social media platforms Facebook and its central European counterpart, VKontakte, the researchers started with a given hate cluster and looked outward to find a second one that was strongly connected to the original. They discovered that hate crosses the boundaries of specific internet platforms, including Instagram, Snapchat and WhatsApp; geographic location, including the United States, South Africa, and parts of Europe; and languages, including English and Russian.

The researchers saw clusters that were banned create new adaptation strategies, such as migrating and reconstituting on other platforms, or using different languages to regroup on other platforms and/or reenter the same platform. This allows the cluster to quickly bring back thousands of supporters to a platform on which they had been banned and highlights the need for cross-platform cooperation.

“The analogy is no matter how much weed killer you place in a yard, the problem will come back, potentially more aggressively,” said Neil Johnson, now a professor of physics at GW who started and led the study while at UM. “In the online world, all yards in the neighborhood are interconnected in a highly complex way—almost like wormholes. This is why individual social media platforms like Facebook need new analysis such as ours to figure out new approaches to push them ahead of the curve.”

Using insights gleaned from its online hate mapping, the team developed four intervention strategies that social media platforms could immediately implement based on situational circumstances:

- Reduce the power and number of large clusters by banning the smaller clusters that feed into them.

- Attack the Achilles’ heel of online hate groups by randomly banning a small fraction of individual users in order to make the global cluster network fall apart.

- Pit large clusters against each other by helping anti-hate clusters find and engage directly with hate clusters.

- Set up intermediary clusters that engage hate groups to help bring out the differences in ideologies between them and make them begin to question their stance.

The researchers noted each of their strategies could be adopted on a global scale and simultaneously across all platforms without having to share the sensitive information of individual users or commercial secrets, which has been a prior stumbling block to cross-platform coordination.
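The second strategy, randomly banning a small fraction of users, can be illustrated with a toy simulation. The sketch below is not the researchers' model or data: the network shape (tight clusters chained together by single bridge links), the function names, and the 10 percent ban rate are all assumptions chosen for illustration. It measures how large the biggest connected group of users remains after a random ban.

```python
import random
from collections import deque


def largest_component(adjacency, banned=frozenset()):
    """Size of the largest connected group among users who are not banned."""
    seen = set(banned)  # banned users are never visited
    best = 0
    for start in adjacency:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:  # breadth-first search over one connected group
            node = queue.popleft()
            size += 1
            for neighbor in adjacency[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        best = max(best, size)
    return best


def ban_random_fraction(nodes, fraction, rng):
    """Pick round(fraction * number of users) users uniformly at random."""
    pool = sorted(nodes)
    return set(rng.sample(pool, round(fraction * len(pool))))


def toy_network(num_clusters=20, members_per_cluster=10):
    """Hypothetical network: tight clusters joined by single bridge links."""
    adjacency = {}
    for c in range(num_clusters):
        members = [(c, m) for m in range(members_per_cluster)]
        for user in members:
            # everyone inside a cluster is connected to everyone else in it
            adjacency[user] = {other for other in members if other != user}
    for c in range(num_clusters - 1):
        # one bridge: member 0 of cluster c links to member 1 of cluster c + 1
        a, b = (c, 0), (c + 1, 1)
        adjacency[a].add(b)
        adjacency[b].add(a)
    return adjacency


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    network = toy_network()
    print("largest group before bans:", largest_component(network))
    banned = ban_random_fraction(network.keys(), 0.10, rng)
    print("largest group after banning 10%:", largest_component(network, banned))
```

In this toy setup, each bridge depends on just two users, so a ban that happens to hit either one splits the chain. Real networks are far more redundant, and the result depends heavily on the assumed structure.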

Using their map and its mathematical modeling as a foundation, Wuchty, Johnson, and other members of their team are developing software that could help regulators and enforcement agencies implement the new interventions.

In addition to Wuchty, other UM authors on the study, which was supported in part by a grant from the U.S. Air Force, included recent graduates Minzheng Zhang and Pedro Manrique, and graduate student Prajwal Devkota, who was instrumental in data collection.